Abstract

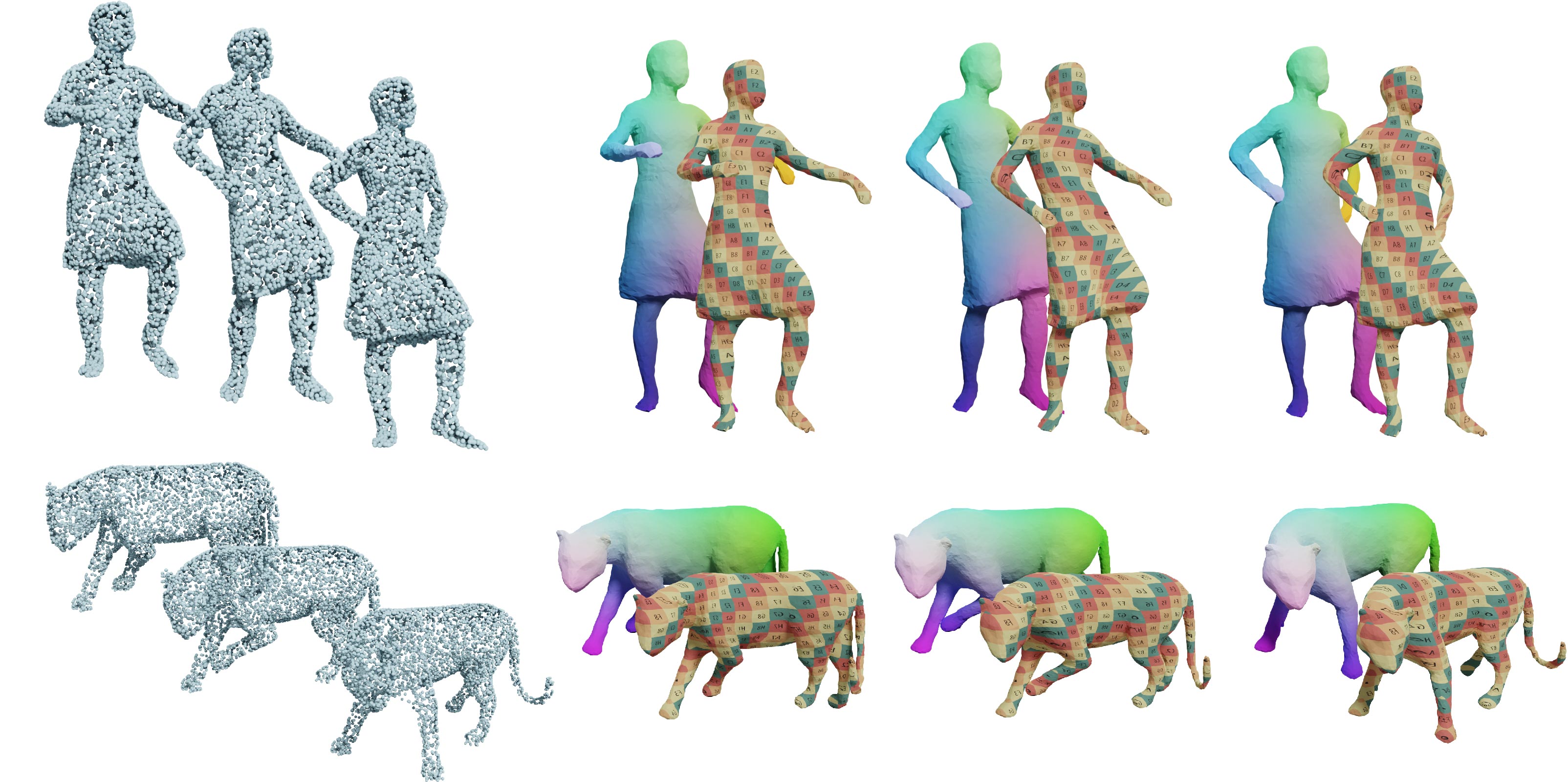

This paper explores the problem of reconstructing temporally consistent surfaces from a 3D point cloud sequence without point correspondences. To address this challenging task, we propose DynoSurf, an unsupervised learning framework that integrates a template surface representation with a learnable deformation field. Specifically, we design a coarse-to-fine strategy for learning the template surface based on the deformable tetrahedron representation. Furthermore, we propose a learnable deformation representation based on learnable control points and blending weights, which deforms the template surface non-rigidly while preserving the consistency of the local shape. Experimental results show that DynoSurf significantly outperforms current state-of-the-art approaches, demonstrating its potential as a powerful tool for dynamic mesh reconstruction.
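As a rough illustration of how control points and blending weights can drive a non-rigid deformation of a template, here is a minimal NumPy sketch in the spirit of embedded deformation / linear blend skinning. Each control point carries its own rigid motion, and every template vertex blends those motions with soft weights, so nearby vertices move together and local shape is preserved. The Gaussian-RBF weights with a per-control-point radius, the axis-angle rotations, and all function names here are assumptions made for illustration, not DynoSurf's published parameterization; in training, these quantities would be optimized with an autodiff framework rather than fixed.

```python
import numpy as np


def blending_weights(vertices, controls, log_radius):
    """Soft-assign each template vertex to the control points.

    Hypothetical Gaussian-RBF parameterization with one learnable radius per
    control point; every row of the returned (N, K) matrix sums to 1.
    """
    d2 = ((vertices[:, None, :] - controls[None, :, :]) ** 2).sum(-1)  # (N, K)
    logits = -d2 / (2.0 * np.exp(2.0 * log_radius)[None, :])
    logits -= logits.max(axis=1, keepdims=True)  # stabilise the softmax
    w = np.exp(logits)
    return w / w.sum(axis=1, keepdims=True)


def axis_angle_to_matrix(aa):
    """Rodrigues' formula: (K, 3) axis-angle vectors -> (K, 3, 3) rotations."""
    theta = np.linalg.norm(aa, axis=1, keepdims=True)  # (K, 1) rotation angles
    k = aa / np.maximum(theta, 1e-12)  # unit axes (zero vector when theta == 0)
    S = np.zeros((aa.shape[0], 3, 3))  # skew-symmetric cross-product matrices
    S[:, 0, 1], S[:, 0, 2] = -k[:, 2], k[:, 1]
    S[:, 1, 0], S[:, 1, 2] = k[:, 2], -k[:, 0]
    S[:, 2, 0], S[:, 2, 1] = -k[:, 1], k[:, 0]
    th = theta[:, :, None]  # (K, 1, 1) for broadcasting
    return np.eye(3)[None] + np.sin(th) * S + (1.0 - np.cos(th)) * (S @ S)


def deform(vertices, controls, weights, rotations_aa, translations):
    """Blend per-control-point rigid motions: v' = sum_k w_ik (R_k (v - c_k) + c_k + t_k)."""
    R = axis_angle_to_matrix(rotations_aa)  # (K, 3, 3)
    offsets = vertices[:, None, :] - controls[None, :, :]  # (N, K, 3)
    moved = np.einsum("kij,nkj->nki", R, offsets) + controls[None] + translations[None]
    return (weights[..., None] * moved).sum(axis=1)  # (N, 3)


# Usage with random stand-in data (hypothetical sizes and initialization).
rng = np.random.default_rng(0)
template = rng.normal(size=(100, 3))  # stand-in for template mesh vertices
controls = template[rng.choice(100, 8, replace=False)].copy()  # init: subsample vertices
W = blending_weights(template, controls, log_radius=np.zeros(8))
rot = rng.normal(scale=0.1, size=(8, 3))  # one frame's per-control rotations
trans = rng.normal(scale=0.05, size=(8, 3))  # one frame's per-control translations
frame_vertices = deform(template, controls, W, rot, trans)
```

Because the weights of each vertex sum to one, identity motions leave the template unchanged and a common translation shifts it rigidly; only differing per-control motions produce non-rigid deformation.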

Method

Illustration of the proposed DynoSurf, which reconstructs temporally consistent dynamic surfaces from time-varying point cloud sequences without requiring any ground-truth surfaces or temporal correspondence information.
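This page does not state the training loss. A common correspondence-free choice, assumed here purely for illustration, is the Chamfer distance between the deformed template and each frame's point cloud: every point is matched to its nearest neighbour, so the input points may arrive in any order. Because every frame is the same template with moved vertices, temporal correspondence follows from the shared face list rather than from supervision. The minimal sketch below compares mesh vertices directly; a practical implementation would more likely sample points on the mesh surface, and the function names are hypothetical.

```python
import numpy as np


def chamfer_distance(a, b):
    """Symmetric Chamfer distance (squared L2) between point sets a (N, 3) and b (M, 3).

    Needs no correspondence: each point is matched to its nearest neighbour.
    """
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(-1)  # (N, M) pairwise distances
    return d2.min(axis=1).mean() + d2.min(axis=0).mean()


def sequence_loss(frame_vertices, point_clouds):
    """Average Chamfer distance over all frames.

    frame_vertices[t] is the template deformed to frame t; every frame reuses the
    template's face list, so vertex i is the same surface point in every frame.
    """
    losses = [chamfer_distance(v, p) for v, p in zip(frame_vertices, point_clouds)]
    return float(np.mean(losses))


# Toy sequence: one triangle translating along x, observed as shuffled point sets.
faces = np.array([[0, 1, 2]])  # shared connectivity for every frame
template = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
frames = [template + np.array([0.1 * t, 0.0, 0.0]) for t in range(3)]
rng = np.random.default_rng(0)
clouds = [f[rng.permutation(3)] for f in frames]  # shuffled: no per-point correspondence
loss = sequence_loss(frames, clouds)
```

Here the loss is zero even though the point order differs in every frame, which is the property that lets such a pipeline train without temporal correspondences.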

BibTeX

@article{yao2024dynosurf,
  author = {Yao, Yuxin and Ren, Siyu and Hou, Junhui and Deng, Zhi and Zhang, Juyong and Wang, Wenping},
  title = {{DynoSurf}: Neural Deformation-based Temporally Consistent Dynamic Surface Reconstruction},
  journal = {arXiv},
  year = {2024},
}